Weaviate

Quick Summary

DeepEval allows you to evaluate your Weaviate retriever and optimize retrieval hyperparameters like top-K, embedding model, and similarity function.

To get started, install Weaviate’s Python client using the following command:

pip install weaviate-client

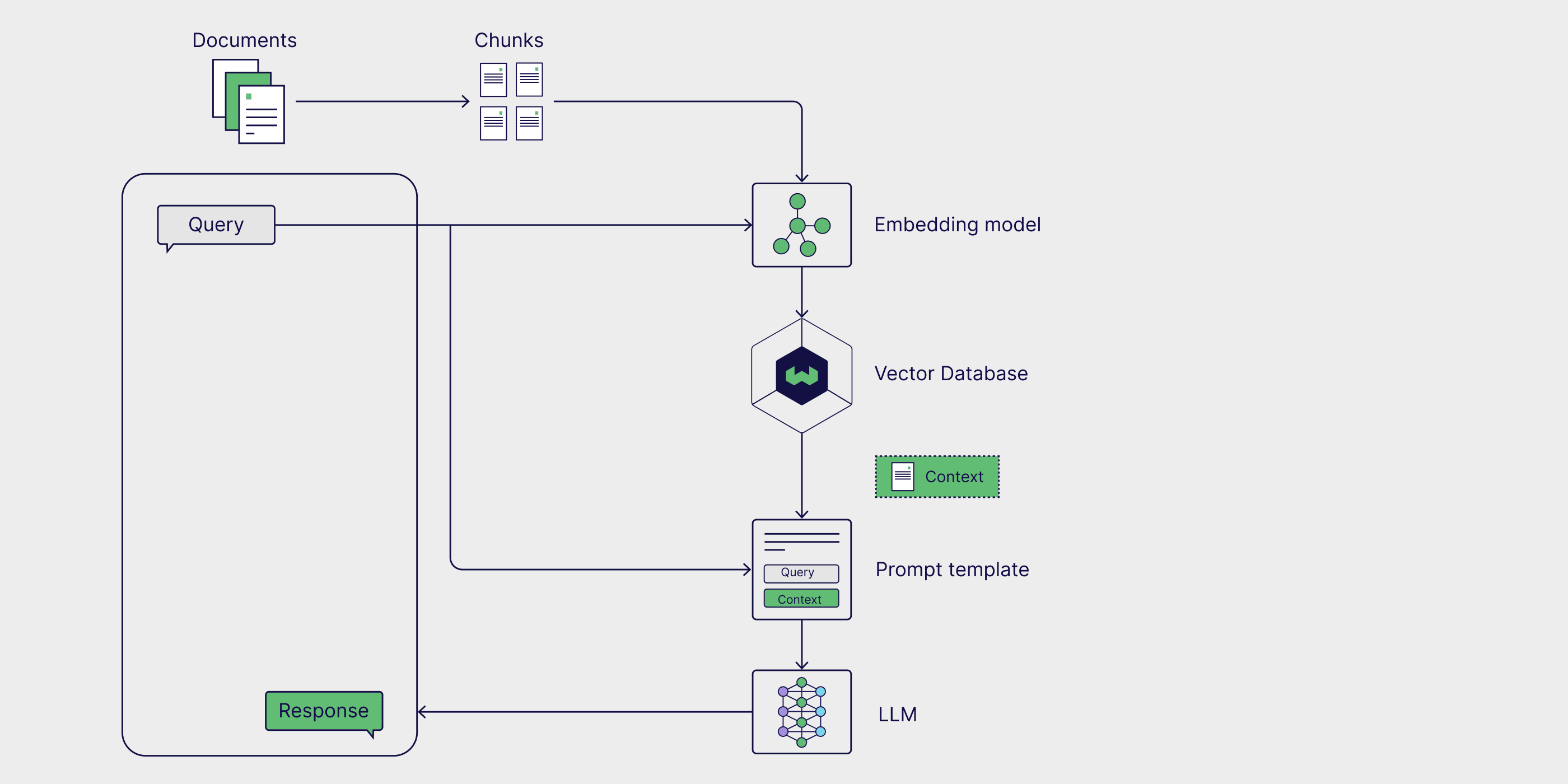

Weaviate is a powerful and scalable vector database designed for high-performance semantic search in RAG applications. It provides an intuitive API for managing embeddings, performing hybrid search, and integrating with LLMs. Learn more about Weaviate here.

This diagram illustrates how the Weaviate vector database (retriever) fits into your RAG pipeline as a retriever.

Setup Weaviate

To get started, connect to your local or cloud-hosted Weaviate instance.

import weaviate

import os

client = weaviate.Client(

url="http://localhost:8080", # Change this if using Weaviate Cloud

auth_client_secret=weaviate.AuthApiKey(os.getenv("WEAVIATE_API_KEY")) # Set your API key

)

Next, create a Weaviate schema with the correct class definition to store text and vector properties for similarity search.

# Define the schema

class_name = "Document"

if not client.schema.exists(class_name):

schema = {

"classes": [

{

"class": class_name,

"vectorizer": "none", # Using an external embedding model

"properties": [

{"name": "text", "dataType": ["text"]}, # Stores chunk text

]

}

]

}

client.schema.create(schema)

Finally, define an embedding model to embed your document chunks before adding them to Weaviate for retrieval.

# Load an embedding model

from sentence_transformers import SentenceTransformer

model = SentenceTransformer("all-MiniLM-L6-v2")

# Example document chunks

document_chunks = [

"Weaviate is a powerful vector database.",

"RAG improves AI-generated responses with retrieved context.",

"Vector search enables high-precision semantic retrieval.",

...

]

# Store chunks with embeddings

with client.batch as batch:

for i, chunk in enumerate(document_chunks):

embedding = model.encode(chunk).tolist() # Convert text to vector

batch.add_data_object(

{"text": chunk}, class_name=class_name, vector=embedding

)

To use Weaviate as the vector database and retriever in your RAG pipeline, query your collection to retrieve relevant context based on user input and incorporate it into your prompt template. This ensures your model has the necessary information for accurate and well-informed responses.

Evaluating Weaviate Retrieval

Evaluating your Weaviate retriever consists of 2 steps:

- Preparing an

inputquery along with the expected LLM response, and using theinputto generate a response from your RAG pipeline to create anLLMTestCasecontaining the input, actual output, expected output, and retrieval context. - Evaluating the test case using a selection of retrieval metrics.

An LLMTestCase allows you to create unit tests for your LLM applications, helping you identify specific weaknesses in your RAG application.

Preparing your Test Case

The first step in generating a response from your RAG pipeline is retrieving the relevant retrieval_context from your Weaviate collection. Perform this retrieval for your input query.

def search(query):

query_embedding = model.encode(query).tolist()

result = client.query.get("Document", ["text"]) \

.with_near_vector({"vector": query_embedding}) \

.with_limit(3) \

.do()

return [hit["text"] for hit in result["data"]["Get"]["Document"]] if result["data"]["Get"]["Document"] else None

query = "How does Weaviate work?"

retrieval_context = search(query)

Next, pass the retrieved context into your LLM's prompt template to generate a response.

prompt = """

Answer the user question based on the supporting context.

User Question:

{input}

Supporting Context:

{retrieval_context}

"""

actual_output = generate(prompt) # Replace with your LLM function

print(actual_output)

Let's examine the actual_output generated by our RAG pipeline:

Weaviate is a scalable vector database optimized for semantic search and retrieval.

Finally, create an LLMTestCase using the input and expected output you prepared, along with the actual output and retrieval context you generated.

from deepeval.test_case import LLMTestCase

test_case = LLMTestCase(

input=input,

actual_output=actual_output,

retrieval_context=retrieval_context,

expected_output="Weaviate is a powerful vector database for AI applications, optimized for efficient semantic retrieval.",

)

Running Evaluations

To run evaluations on the LLMTestCase, we first need to define relevant deepeval metrics to assess the Weaviate retriever: contextual recall, contextual precision, and contextual relevancy.

These contextual metrics help assess your retriever. For more retriever evaluation details, check out this guide.

from deepeval.metrics import (

ContextualRecallMetric,

ContextualPrecisionMetric,

ContextualRelevancyMetric,

)

contextual_recall = ContextualRecallMetric(),

contextual_precision = ContextualPrecisionMetric()

contextual_relevancy = ontextualRelevancyMetric()

Finally, pass the test case and metrics into the evaluate function to begin the evaluation.

from deepeval import evaluate

evaluate(

[test_case],

metrics=[contextual_recall, contextual_precision, contextual_relevancy]

)

Improving Weaviate Retrieval

Below is a table outlining the hypothetical metric scores for your evaluation run.

Metric | Score |

|---|---|

| Contextual Precision | 0.85 |

| Contextual Recall | 0.92 |

| Contextual Relevancy | 0.44 |

Each contextual metric evaluates a specific hyperparameter. To learn more about this, read this guide on RAG evaluation.

To improve your Weaviate retriever, you'll need to experiment with various hyperparameters and prepare LLMTestCases using generations from different retriever versions.

Ultimately, analyzing improvements and regressions in contextual metric scores (the three metrics defined above) will help you determine the optimal hyperparameter combination for your Weaviate retriever.

For a more detailed guide on tuning your retriever’s hyperparameters, check out this guide.